2019 is the year in which AI will complete its journey through the broadcast industry, from arcane, cutting-edge buzzword to productised and commonplace.

The transition has been remarkably quick: AI first really broke through to widespread attention at IBC2017 and has been racing round the track of the hype cycle ever since.

Indeed, Gartner, the analyst that invented the hype cycle, plots an AI Maturity Model that begins at Level 1 with ‘Awareness’ and ends at Level 5, which it tags as ‘Transformational’.

You could possibly make the case that 2019 is when the broadcast industry starts to transition to Level 3, ‘Operational’, and “AI in production creating value by product optimisation or product/service innovations.”

One thing is for certain, and that is that AI exemplifies the modern marketeer’s favourite phrase of ‘disruptor’. So, what is driving it in the current industry: customer demand or R&D spend? According to Tedial vice president, products, Jerome Wauthoz, it’s both.

“Customers bring ideas to address immediate needs but first we analyse the customer’s pain points and challenges, and then we present solutions that answer those requirements,” he says, pointing to the company’s SmartLive live event production assistance tool as an example.

“In terms of AI, the chief current requests are: automatic metadata enrichment to give more context to ingested content; speech-to-text of commentary track or interviews; and face recognition, sentiment analysis and place recognition. Today, clients must hire people to log all this information and it is really costly. They seek to automate this process, so their production team can focus on creating value and not tagging.”

Produce more

Efficiencies are very much the name of the game here. Wauthoz says companies are constantly looking to produce more and automate more, to address the demand for multiple deliveries more efficiently, and to add more remote production capability at the same time.

“AI will continue to grow in terms of automation in all aspects of the value chain,” he says.

“Not only for metadata enrichment but also in terms of workflow orchestration, error anticipation, consumption stats, ad management, and so on. Our solution is to combine different AI technologies together to create measurable end user value.”

EVS, meanwhile, is following a more R&D-centric approach, creating a dedicated AI innovation team that is looking to integrate AI into its existing products and services. This way, says the company’s marketing and communications manager, Sébastien Verlaine, EVS can deliver new features that customers might not even know they need but that provide them with more efficient operations, increased creativity, or let them achieve things that haven’t been technically possible before.

“For example, you can see how AI has enhanced our video assistant referee system Xeebra,” he says. “Using a neural network technique, our VAR system intelligently calibrates the field of play to accurately position graphics, like a 3D offside line to camera feeds. This is providing referees with a faster, easier to use, and much more accurate officiating tool.”

At last year’s NAB, the company showed an application that used AI-based techniques to generate intermediate images, allowing production teams to create super motion footage from regular camera feeds. At this year’s show, much is under wraps, though he says that there will be several new AI-driven applications and solutions on display alongside “a new software application that controls robotic cameras using AI and a showcase of how those AI-enhanced feeds can be ingested into our X-One unified production system.”

Hybrid approach

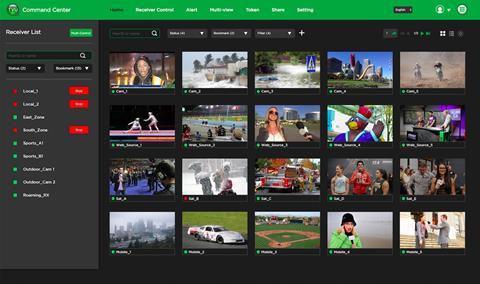

TVU Networks exemplifies the hybrid approach. On the one hand, it has developed a broad-based AI platform, which it calls TVU MediaMind. On the other, it is also developing specific solutions for specific tasks as and when warranted, such as the TVU Transcriber it launched at NAB New York towards the end of last year.

“I expect that we will continue to meet most customer requirements with TVU MediaMind and occasionally when a problem is very specific and it’s likely to be shared between many operators, then we will launch specific solutions built upon our core AI technology,” states Paul Shen, founder and chief executive, TVU Networks.

Shen makes the point that most of the companies TVU is talking to don’t have budgets for AI; they have budgets to solve problems, and the way that those problems are solved is largely immaterial. That, though, may change over the next few years.

“The main trend is that AI will move from ‘last resort’ to ‘first thought’,” he says. “What I mean by this is that presently AI tends to be deployed when it’s the only logical solution. However, when operators have seen just how effective it can be, we will move to a situation in which the first question a CTO asks is ‘can AI solve this issue?’”

At Grass Valley, technical product manager Drew Martin adds broadcast-specific detail on the technology’s early progress along the deployment curve. He sees the main demand at the moment in addressing time- and resource-heavy tasks such as master control, metadata logging and ingest, with the result that tasks like cutting clips for voiceovers in newsrooms can be sped up dramatically.

“With pressure to produce more content and personalised viewing experiences – and with lower budgets – content owners, broadcasters and media companies are looking for solutions that drive efficiency within their operational workflows,” he says.

“Eventually, the only human-centric tasks that we will see in broadcast environments will be the creative ones that ultimately result in rich storytelling.”

Interestingly, he also says that while the market is saturated with AI providers, there are few that aggregate. “This,” he says, “will be Grass Valley’s main focus.”

Brick Eksten, CTO Playout and Networking at Imagine Communications, names analysis of audience behaviour, alongside the demand for AI-driven production automation, as a potential 2019 driver for the technology, especially given its role in enabling addressable advertising.

He also says that AI’s ability to recognise patterns in extremely large datasets will be able to enhance the way we work with systems throughout the industry.

It will be interesting to see how the new ML/AI subsystems that Imagine is building into its Zenium microservices architecture, in operation at Sky Italia, perform in that context. As Eksten explains, if AI is to deliver, it needs to be part of a software-defined platform, and a microservices architecture is a good fit for this. This also enables the developer to avoid the ‘garbage in, garbage out’ trap.

“A microservices architecture has an orchestration layer which pulls together individual elements of functionality to perform a workflow, then immediately releases those software applications and the hardware resources on which they run for other workflows,” he explains.

“The intelligence sits in the orchestration layer, which is building those virtual machines. String together the correct microservices, orchestrate their operation and you have the natural data flow for ML/AI.

“Zenium orchestrates not only our microservices but those from third party developers, allowing users to create precisely tailored functionality for their workflows. Normalisation of control, data and timing allows the ML/AI sub-system to see and understand all of the data flows – and most importantly the timing associated with those data flows in real-time. This not only allows for precise learning, it also allows for greater predictability and more meaningful decisions.”
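For readers who want to picture the pattern Eksten describes, the following is a minimal sketch of an orchestration layer that claims microservices for a workflow, runs them in order, records the timing of each step, and then releases them for other workflows. It is an illustration only: the `Microservice` and `Orchestrator` classes, the service names and the payload fields are all hypothetical and are not taken from Zenium or any Imagine Communications API.

```python
from dataclasses import dataclass, field
from time import perf_counter
from typing import Callable


@dataclass
class Microservice:
    """Hypothetical unit of functionality; not a real Zenium type."""
    name: str
    run: Callable[[dict], dict]
    in_use: bool = False


@dataclass
class Orchestrator:
    """Hypothetical orchestration layer over a shared pool of services."""
    pool: dict[str, Microservice]
    # (service name, seconds taken) pairs: the kind of normalised timing
    # data an ML/AI sub-system could learn from.
    timings: list[tuple[str, float]] = field(default_factory=list)

    def run_workflow(self, steps: list[str], payload: dict) -> dict:
        acquired: list[Microservice] = []
        try:
            # Claim every service the workflow needs.
            for name in steps:
                service = self.pool[name]
                if service.in_use:
                    raise RuntimeError(f"{name} is busy")
                service.in_use = True
                acquired.append(service)
            # Run the steps in order, timing each one.
            for service in acquired:
                start = perf_counter()
                payload = service.run(payload)
                self.timings.append((service.name, perf_counter() - start))
            return payload
        finally:
            # Release the services immediately for other workflows.
            for service in acquired:
                service.in_use = False


# Assumed example services and asset name, for illustration only.
pool = {
    "ingest": Microservice("ingest", lambda p: {**p, "ingested": True}),
    "transcode": Microservice("transcode", lambda p: {**p, "codec": "h264"}),
}
orchestrator = Orchestrator(pool)
result = orchestrator.run_workflow(["ingest", "transcode"], {"asset": "clip-001"})
```

In this sketch the `timings` list stands in for the normalised control, data and timing information mentioned in the quote; a real platform would presumably collect far richer data than this.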

Sky Italia’s Zenium microservices architecture is currently using AI to enrich its compression and distribution operations. And because Zenium is an open platform, the broadcaster is also creating its own AI algorithms to optimise and automate the allocation of resources. The idea is that introducing AI and machine learning into the content data flow leads to new and unique functionality, the reduction of operating costs, and the reassignment of personnel to more creative tasks.

“The temptation is to think of AI as a solver of all problems, when of course broadcast and media remains a people-driven, creative and innovative industry,” he concludes.

“AI will be an effective part of the ecosystem, but we all have to develop an understanding of how it can improve our creativity, our operational efficiency, and our commercial viability.”
