From playout to post, the cloud is upending business models – but the biggest quantum leap is still to come.

Red Bee Media is building the first all-software playout service, with a commercial launch as soon as Q2 2019.

First showcased at IBC2018, the solution is the first in the world to use uncompressed video over IP, without requiring any dedicated infrastructure.

It’s the result of two years of R&D at the former BBC-owned facility, which still manages playout for all BBC channels. Now owned by Ericsson, headquartered in London and with eleven international media hubs, Red Bee also plays out the linear services for ITV, Channel 4 and Canal+, as well as VOD services including All4 and My5.

“Just lifting what we do into private or public cloud would be an interesting engineering challenge but one that would not necessarily benefit customers,” explains Richard Cranefield, Head of Portfolio, Playout.

What broadcasters want, he says, is the ability to launch new channels quickly, such as an extra children’s channel for Christmas, and to adapt existing services to target different audiences or regions for a short period of time.

That type of business model isn’t possible with a traditional playout model reliant on single-purpose machines that must be purchased and installed many months in advance.

“For us the benefits of moving playout to the cloud would only be realised if you can move all of it,” says Cranefield.

Playout has moved to the cloud more slowly than other areas of the business, such as media management and post production. Elements of the playout chain, like automation, have been virtualised (made software-only), but a wholesale move like Red Bee’s would not have been possible a couple of years ago.

“To deploy a new channel quickly we need to have all the tools needed to run a channel in software rather than physical video servers, or logo inserters sitting in frames in a rack, or compliance recorders, transcoders, graphics inserters. All of those have been discrete appliances but we needed them all in software.”

All of this had to work together, for any channel or service a Red Bee client wants at the drop of a hat.

While vendors have made significant advances in replacing SDI with IP and adapting hardware-centric products to software, there were gaps.

Red Bee worked with long-standing partners including Imagine Communications, Grass Valley and Pebble Beach, but says that not all of them necessarily delivered for its cloud deployment.

“The vendor community are doing a great job at running interoperability tests of their own ecosystem and sometimes between each other’s products, but you need that tech to work in your own environment,” he stresses.

That’s where the interop promise of IP is sometimes not matched by reality.

“Interop is nowhere near as simple as plugging one piece of kit into another with an SDI cable. Now your software may not interface directly with other software. It will need connecting over the network fabric, passing through two or more other vendors’ infrastructure. This is what our two years of testing has enabled us to achieve.”

Indeed, figuring out which vendors’ equipment interoperates well with which is part of Red Bee’s “intellectual property”, he says, and something Red Bee hopes will give it a competitive edge.

“We’re also not vocal about saying which kit specifically we are deploying because we don’t see this as a design set in stone.”

Red Bee wants the ability to swap software in and out depending on whether further software advances make better use of CPUs or network bandwidth or are more cost effective.

Its service will combine public cloud (from Microsoft Azure, among others) and private cloud (built in some of its own data centres). Public cloud will handle the burstier OTT services, while playout will run in private cloud because of the complexity and bandwidth it demands.

“Because of the amount of live events we carry which need to work in low and predictable latency we need to work with uncompressed video,” says Cranefield. “We have got uncompressed streaming at 1.5 Gbps so doing compressed would be easy.”
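The 1.5 Gbps figure is close to the raw data rate of a single HD signal. A back-of-the-envelope sketch, assuming 1080i/50 video (25 frames per second, the format UK HD channels broadcast in) sampled at 10-bit 4:2:2; the function and constant names are our own, and Red Bee has not said exactly which formats it carries:

```python
def raw_video_bitrate(width, height, frames_per_second, bits_per_pixel):
    """Uncompressed bitrate in bits per second for a given raster."""
    return width * height * frames_per_second * bits_per_pixel

# Assumed sampling: 10-bit 4:2:2. Each pixel has one 10-bit luma sample, and
# the Cb/Cr pair is shared by two horizontal pixels, so 20 bits per pixel on average.
BITS_PER_PIXEL_422_10BIT = 20

# Active picture only: 1920 x 1080 at 25 frames per second.
active = raw_video_bitrate(1920, 1080, 25, BITS_PER_PIXEL_422_10BIT)

# Full raster including blanking: 2640 x 1125 samples per frame at 50 Hz,
# which is what an HD-SDI link carries.
full_raster = raw_video_bitrate(2640, 1125, 25, BITS_PER_PIXEL_422_10BIT)

print(f"active picture: {active / 1e9:.3f} Gbps")
print(f"full raster:    {full_raster / 1e9:.3f} Gbps")
```

The full-raster result is HD-SDI's familiar 1.485 Gbps line rate. An SMPTE ST 2110-20 stream sends only the active picture, so it needs somewhat less, before packet overhead is added back on.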

There is no launch customer, although Red Bee is talking with all its existing clients about migration. UK broadcasters are ahead of the pack.

“Devising the right commercial model was as hard, if not more so, than getting the actual technology to work,” he says.

It has persuaded vendors to switch from selling products as a one-off capital outlay to charging for them as an ongoing operational expense. At the same time, it had to work out a rate card with predictable pricing for clients.

“They will rent capacity at a known price to help them make business decisions much quicker.”

Rebuilding post production in the cloud

Long anticipated, the ability to collaborate in real time with teams in multiple geographic locations is now a reality, and it is altering the post production landscape.

VFX has led the way. Banks of computers have routinely been called on to render frames, but only the largest facilities have been able either to house that horsepower on site or to pay for spinning up additional machines in the cloud.

MPC did this on its way to winning an Oscar for The Jungle Book, running millions of core hours on Google’s cloud.
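Rendering scales so well across rented machines because each frame can usually be rendered independently of the others. A rough sketch of how a shot might be split across a pool of cloud machines; the function names, shot length and per-frame render time are hypothetical, and data transfer and scheduling overheads are ignored:

```python
import math

def split_frames(first_frame, last_frame, machines):
    """Divide an inclusive frame range into contiguous chunks, at most one per machine."""
    total = last_frame - first_frame + 1
    chunk_size = math.ceil(total / machines)
    chunks = []
    start = first_frame
    while start <= last_frame:
        end = min(start + chunk_size - 1, last_frame)
        chunks.append((start, end))
        start = end + 1
    return chunks

def wall_clock_minutes(total_frames, machines, minutes_per_frame):
    """Elapsed time if every machine renders its chunk in parallel.

    The busiest machine sets the pace, so the per-machine share is rounded up.
    """
    return math.ceil(total_frames / machines) * minutes_per_frame

# Hypothetical shot: 240 frames (10 seconds at 24 fps), 30 minutes per frame.
print(split_frames(1, 240, 100)[:3])     # [(1, 3), (4, 6), (7, 9)]
print(wall_clock_minutes(240, 1, 30))    # 7200 minutes (five days) on one machine
print(wall_clock_minutes(240, 100, 30))  # 90 minutes on 100 cloud machines
```

The same arithmetic explains the appeal of renting rather than owning: the total core hours barely change, but the elapsed time collapses once enough machines are spun up.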

So established has cloud rendering become that even this once ground-breaking capability has evolved to encompass a whole host of post production workflows, from pre-viz to transcoding. In doing so, the field has also opened up to smaller shops and even freelancers.

One of the key benefits is not needing to buy or maintain expensive hardware to run a business: everything runs in the cloud, with only the display being streamed to the user.

“The cloud can essentially offer a pop-up studio, accessible anytime, anyplace across the world,” says Jon Wadelton, chief technology officer at Foundry.

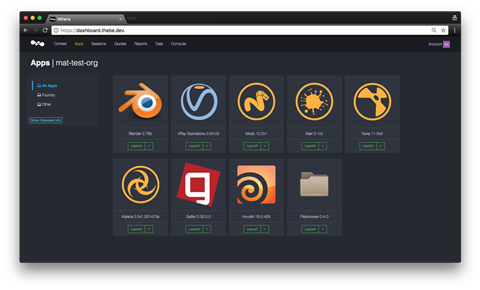

This year Foundry launched Athera, a platform hosted on Google Cloud that is intended to make it possible to run a visual effects studio using nothing more than a laptop and a standard web browser.

“The lightbulb moment for me was having a 2 Gig Lidar scene loaded to Google Cloud and running on Nuke,” explains Lindsay Adams, VFX Supervisor at Sydney’s Future Associate, which is working on VFX for HBO’s serialisation of the graphic novel Watchmen. “When I animated a virtual camera and sent that to the compute stack, a result came back within 10 minutes. I had access to more than a thousand machines from my hotel room, from my MacBook Pro.”

Sohonet has a competing service, used by Milk for its Oscar-winning work on the sci-fi film Ex Machina.

CEO Chuck Parker says the technology has now evolved to a point where a post company might employ only a dozen managers and project supervisors on its books. “They can bid with confidence on jobs of any scale and any timeframe knowing that they can readily rent physical space in any location, anywhere in the world, to flexibly take advantage of tax breaks and populate it with freelance artists,” he says.

With file sizes set to keep increasing and immersive media such as VR emerging, leveraging the cloud will no longer be the preserve of the highest-budget projects and largest vendors.

“You can have big budget artists and use big budget renders but not have the big budget facility,” says Austin Meyers, Associate Director at LA post house Steelhead. “It will be a major turning point in the industry.”

Widespread adoption of this technology may not happen overnight, but we’re already seeing the beginnings of it with early pioneers such as Inversion Studio.

“The most useful thing is being able to access our files and footage anywhere in the world and use talent anywhere in the world,” says Arvind Sond, co-founder of Inversion Studio. “Working on the cloud is the future.”

Quantum cloud computing

We are on the verge of an era of unprecedented data in which the volumes generated will be so incalculably large that current computing technology will buckle and break under the strain.

The race is on to build the first generation of quantum computers, which promise far faster calculation than existing machines and could supercharge developments in artificial intelligence.

The push to engineer quantum computers gathered pace a few years ago, in anticipation that Moore’s Law, a long-reliable rule of thumb for the growth of computing power, was about to reach the end of its shelf life.

Moore’s Law holds that the number of transistors on a microprocessor doubles roughly every two years (a period often quoted as 18 months), but in the next few years, and perhaps as soon as 2020, it will be practically impossible to build semiconductor transistors any smaller – using current technology.
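Because the doubling compounds, the exact period matters a great deal. A small sketch of the exponential behind Moore’s Law, N(t) = N0 × 2^(t/T), where T is the doubling period; the function name is our own:

```python
def growth_factor(years, doubling_period_years):
    """How many times larger a transistor count gets after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)

# Six years of scaling under the two commonly quoted doubling periods.
print(growth_factor(6, 1.5))  # 16.0 with an 18-month period
print(growth_factor(6, 2.0))  # 8.0 with a two-year period
```

Six years at an 18-month period multiplies transistor counts sixteenfold, against eightfold at two years, which is why even a modest slowdown in shrinking transistors is felt so sharply.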

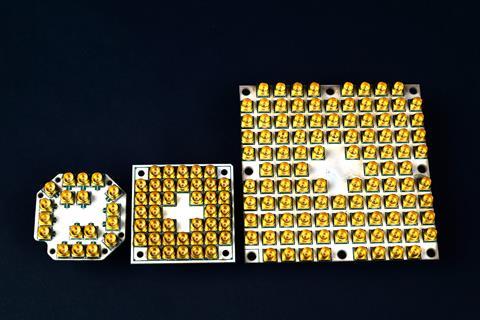

Quantum computers work at the atomic scale, using atoms and molecules to perform memory and processing tasks.

“Microsoft and others are working on quantum computing,” Microsoft co-founder Bill Gates wrote on Reddit. “It isn’t clear when it will work or become mainstream. There is a chance that within 6-10 years that cloud computing will offer super-computation by using quantum.”

In 2015, Google claimed that a quantum computer it was testing could solve certain problems 100 million times faster than conventional systems. A year later, boffins at the Massachusetts Institute of Technology (MIT) and the University of Innsbruck in Austria announced the creation of the first scalable quantum computer.

Last November IBM released a quantum computer cloud service, only to be trumped in March by Alibaba Cloud and the Chinese Academy of Sciences, which launched their own first cloud-based quantum computing service.

IBM, though, has an upgraded version in the works as do Intel, Microsoft and more.

At CES 2018 in January, Intel CEO Brian Krzanich predicted that quantum computing will solve problems that today take months or years for the most powerful supercomputers to resolve.

Quantum computations use quantum bits (qubits), which, like the cat in Schrödinger’s famous thought experiment, can be in multiple states at the same time. That is quite different from digital computing’s requirement that data be binary (either in one state or another, 0 or 1). Because a quantum computer can work across a huge number of possibilities at once, and can be offered as a service via the cloud, it opens a future where certain complex problems can be solved in much less time than on a traditional digital device.
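A classical computer can only mimic a qubit register by tracking every amplitude explicitly, which shows both why qubits are powerful and why they are hard to simulate. A minimal pure-Python sketch, assuming an idealised, noise-free register; the function names are hypothetical and this is not how any vendor’s hardware or SDK works:

```python
import math

def uniform_superposition(num_qubits):
    """State after a Hadamard gate on every qubit of |00...0>.

    n qubits are described by 2**n complex amplitudes; here every
    basis state gets the same amplitude, 1/sqrt(2**n).
    """
    dim = 2 ** num_qubits
    amplitude = 1 / math.sqrt(dim)
    return [complex(amplitude, 0.0) for _ in range(dim)]

def measurement_probabilities(state):
    """Born rule: each basis state is measured with probability |amplitude|**2."""
    return [abs(a) ** 2 for a in state]

def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory to store the full state classically (16 bytes = two 64-bit floats)."""
    return (2 ** num_qubits) * bytes_per_amplitude

state = uniform_superposition(3)
probs = measurement_probabilities(state)
print(len(state))                # 8 basis states, |000> to |111>
print(state_vector_bytes(20))    # 16777216 bytes (16 MiB) for 20 qubits
print(state_vector_bytes(50))    # 2**54 bytes (16 PiB) for 50 qubits
```

Measuring the register still returns just one of the eight outcomes, each with probability 1/8, so quantum speed-ups come from algorithms that use interference to make the right answer more likely rather than from reading out every result at once. The memory figures show why each added qubit doubles the cost of simulating the machine classically.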

But while quantum computing has great potential, the field is in its infancy, and it will take many generations of qubit increases before quantum computers begin solving the world’s challenges.

“In the quest to deliver a commercially viable quantum computing system, it’s anyone’s game,” said Mike Mayberry, corporate vice president and managing director of Intel Labs. “We expect it will be five to seven years before the industry gets to tackling engineering-scale problems, and it will likely require 1 million or more qubits to achieve commercial relevance.”

With current quantum computers such as Alibaba’s capable of a mere 20 qubits, you begin to realise the magnitude of the task. We won’t be using a quantum PC on the desktop anytime soon.

No comments yet