Light field is seen as the cutting edge of immersive tech, and a new industry organisation is looking to standardise the technology. James Pearce speaks to IDEA chairman Pete Ludé about what light fields bring to VR.

Science fiction has long shown us a different kind of immersive technology to that which we’re seeing at the moment. Remove the virtual reality glasses and instead step onto Star Trek’s holodeck, where life-size and photo-real holographic images interact, move around you, and seem almost real.

IDEA, an industry body founded earlier this year, is looking to bring the media and broadcast technology industry together around light fields to make this kind of immersive technology a reality.

The Immersive Digital Experiences Alliance (IDEA) is a non-profit industry alliance “working towards developing a family of royalty-free technical specifications that define interoperable interfaces and exchange formats” that will “support the end-to-end conveyance of immersive volumetric and/or light field media”.

Launched in April 2019, the Alliance counts CableLabs, Charter Communications, Cox, Light Field Lab, Otoy and Visby among its members.

“It’s a small group of thought leaders in this new area of immersive media,” explains IDEA chairman Pete Ludé when he sits down with IBC365 at SMPTE 2019.

IDEA is the result of a number of companies “talking over the past few years behind the scenes, sharing notes with one another, and recognising some common missions, goals and requirements for light field technology.”

IDEA currently has six members (mentioned above) but is open to speaking “to a variety of stakeholders that are interested in immersive media”, he adds. This doesn’t just include technology companies.

“It is the people that are making media,” explains Ludé. “It is the creative leaders. We have a liaison with the American Society of Cinematographers and we are developing others because we want to get those viewpoints in.”

This includes network operators too. American cable companies Charter and Cox are already on board, but Ludé wants more network operators because, as he explains, it is “quite feasible to come up with an interchange standard… impractical for actual network conveyance”.

“We want to hear from the people making the content, the people making the technology, and those operating the networks. Having all those voices come together will help make sure that we’re inventing the right thing.”

So what is the “right thing”? Holograms already exist as projections, after all, and so does light field technology.

In fact, the concept behind light field isn’t new. Ludé explains: “When you see how light field display works, you can realise ‘Okay, this is real science.’ And this is something that’s been understood for over 100 years; Gabriel Lippmann invented this stuff going back to 1909.”

“Our common goal here is driven from the invention of this new light field or panoptic (sometimes called holographic) technology,” says Ludé.

“If we’re doing AR or VR, this is a relatively minor extension on to what’s going on now,” he adds. “3D computer graphics [are developing], and there are a lot of standards out there that are handling that pretty well and they’re going to continue to move along.”

With light field, you’re not just taking a flat array of light coming off the front of a TV set; you’re taking what could be thousands or even millions of light rays going in all sorts of different directions.

“By doing that, it gives you the full perception. Now I’m looking through a window; I’m not looking at a display anymore. If I move around, it moves with me. You have different viewpoint lighting, too.”

Viewpoint-dependent lighting means that if you move to a different place or angle, the light and shadow on an object change with you. If you move towards a pond, for example, it may start sparkling; if you move towards a window on, say, a vehicle, a reflection might appear in it.

If this sounds much more complex than current VR offerings, that’s because it is.

Ludé says: “It greatly complicates the way that you need to represent the image. At IDEA, what we’re really trying to do is to start there and say we want a file format, and a stream, that could convey media in the most immersive way possible, which is light field imaging.

“If we can do that, then we could render that into subsets for AR, for VR, for stereoscopic movies.”

Seeing the light

Given how new the organisation is, IBC365 is keen to know why IDEA has been formed: what is the application, or the logic, behind creating a new industry body to standardise this technology?

“We don’t want to have to reinvent anything,” he replies.

“The timing is very good right now, because there is already a very good vocabulary, a set of standards and metadata related to 3D and volumetric modelling. This comes out of computer graphics, it comes out of photogrammetry, it comes out of stereoscopic capture. This is where people will use lidar, time-of-flight cameras and camera arrays for photographic imagery.”

What is missing, he adds, is an “overarching framework” that would allow the industry to take these assets to build full light field images.

“It just hasn’t been necessary before,” says Ludé. “3D models have been built on computers but that is very different from an image captured with light field.”

To that end, IDEA published the first draft of its specification in October, a “huge milestone” for the organisation, according to Ludé. “We’re calling it a 0.9 version, meaning that we know it’s a draft and it’s not totally complete.”


This first version is split into three documents, all of which are available to peruse on IDEA’s website. The central element is the “scene graph”, which allows a user to build up different assets.

“We also have the container format for that and the data encoding spec. Collectively, it’s almost 250 pages of information for people to absorb.”

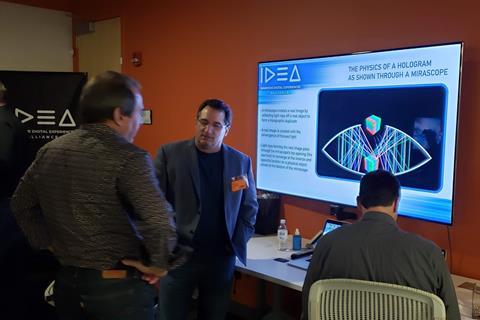

IDEA has been taking the first draft of the Immersive Technology Media Format (ITMF) on the road, with a demonstration at the Display Summit Conference in Colorado last month.

To give attendees a preview of the format’s display-agnostic possibilities, engineers from Charter Communications and CableLabs created a series of demonstrations with the support of IDEA founding members. These demonstrations of the ITMF standard were held at the same venue over two days, October 8th and 9th.

For the first-ever demonstrations, a set of ITMF image files was created and then rendered for different display devices, including VR, stereoscopic and volumetric displays. The ITMF file included 3D models, textures, cameras and a wide variety of parameters within the scene graph of the file. In addition, multiple render targets were used to designate individual display profiles.
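The idea of one shared scene description feeding several display profiles can be sketched as a simple tree. The example below is a hypothetical, generic scene graph in Python: the node kinds, field names and parameters (such as `views` and `camera`) are illustrative assumptions and are not taken from the ITMF specification, which defines its own structures.

```python
# Hypothetical, generic scene graph for illustration only.
# Node kinds and parameter names are NOT taken from the ITMF specification.
from __future__ import annotations

from collections.abc import Iterator
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kind: str  # e.g. "group", "mesh", "texture", "camera", "render_target"
    params: dict = field(default_factory=dict)
    children: list[Node] = field(default_factory=list)

    def add(self, child: Node) -> Node:
        """Attach a child node and return it so it can be extended further."""
        self.children.append(child)
        return child

    def walk(self) -> Iterator[Node]:
        """Depth-first traversal yielding this node and all descendants."""
        yield self
        for child in self.children:
            yield from child.walk()


def render_targets(root: Node) -> list[Node]:
    """Collect every render-target node (one per display profile)."""
    return [node for node in root.walk() if node.kind == "render_target"]


# Build one shared scene: a textured model plus a camera.
scene = Node("root", "group")
whale = scene.add(Node("whale", "mesh", {"file": "whale.obj"}))
whale.add(Node("whale_skin", "texture", {"file": "whale_skin.png"}))
scene.add(Node("main_cam", "camera", {"fov_deg": 60}))

# One render target per display profile; the view counts are made-up examples.
for profile, views in [("vr_headset", 2), ("stereoscopic", 2), ("volumetric", 45)]:
    scene.add(Node(profile, "render_target", {"camera": "main_cam", "views": views}))

for target in render_targets(scene):
    print(f"{target.name}: {target.params['views']} view(s) from {target.params['camera']}")
```

In this sketch each render target only points at a camera in the shared graph, so the same models and textures are reused for every device, and supporting another display type means adding one more render-target node.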

Ludé was there to demonstrate the standard. He says: “It was a very good step to actually put that together. Now we’re going to continue all that work. Now that we have the 0.9 spec, we’re going to be looking at the feedback we’ve received from that and seeing how we can develop ITMF.”

Future challenges

One challenge he admits they need to work on is that light field will mean significantly more data packed into images, so a full video file using the technology will be massive. How massive?

He explains: “One challenge is the huge amount of raw data that it could encompass because we’re talking not about megabytes or gigabytes, we’re talking about terabytes, or exabytes.

“If you’re doing a light field and you have a certain panel, with 8K, or even 4K resolution, instead of one data word for each pixel, I might have 500,000 data words for that one pixel. One pixel is really emitting light rays in different directions.”
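To put Ludé’s figure in perspective, here is a rough back-of-envelope sketch in Python. Only the 500,000 data words per pixel comes from the interview; the 4-byte word size and the 60 frames per second refresh rate are hypothetical assumptions chosen for illustration, and no compression is applied.

```python
# Back-of-envelope estimate of uncompressed light field data volume.
# Only WORDS_PER_PIXEL comes from the interview; the word size and frame
# rate below are hypothetical assumptions for illustration.

PANEL_RESOLUTIONS = {
    "4K": (3840, 2160),  # UHD 4K
    "8K": (7680, 4320),  # UHD 8K
}
WORDS_PER_PIXEL = 500_000  # figure quoted by Ludé
BYTES_PER_WORD = 4         # hypothetical: one 32-bit word per ray sample
FRAMES_PER_SECOND = 60     # hypothetical refresh rate


def raw_bytes_per_frame(width: int, height: int,
                        words_per_pixel: int, bytes_per_word: int) -> int:
    """Uncompressed bytes needed to store one frame of a light field panel."""
    return width * height * words_per_pixel * bytes_per_word


def human_readable(num_bytes: float) -> str:
    """Format a byte count with decimal (SI) units, one decimal place."""
    value = float(num_bytes)
    for unit in ("B", "kB", "MB", "GB", "TB", "PB"):
        if value < 1000:
            return f"{value:.1f} {unit}"
        value /= 1000
    return f"{value:.1f} EB"


if __name__ == "__main__":
    for name, (width, height) in PANEL_RESOLUTIONS.items():
        frame = raw_bytes_per_frame(width, height, WORDS_PER_PIXEL, BYTES_PER_WORD)
        per_second = frame * FRAMES_PER_SECOND
        print(f"{name}: {human_readable(frame)} per frame, "
              f"{human_readable(per_second)} per second")
```

Under those assumptions, a single uncompressed 4K frame works out to roughly 16.6 TB and one second of video to about 995 TB, which illustrates why Ludé talks in terabytes rather than megabytes. The result scales linearly with the assumed word size and frame rate.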

But, he adds, the benefits in terms of creating an immersive experience could be huge.

“With this, we’re creating a light ray that allows me to literally focus at any depth from the screen within a certain window limit,” says Ludé. “And that just means a whole lot more data.

“We want to support things like six degrees of freedom, meaning that I might be moving to the left or right and, as I do so, the parallax is shifting, things have different disparity angles and different parallax, and I’m looking at things with different focal points: my eyes could focus on this object or that object.” This, he adds, would give a much more realistic VR experience.

So what are the applications? Ludé says he is most interested from an entertainment standpoint and thinks the first use cases may arise in theme parks. Holographic images are already commonplace on theme park rides (think of the Harry Potter rides at Universal Studios in Florida), but according to Ludé, these images are “too fuzzy” at the moment.

“The contrast ratio can be terrible, even though the concept is compelling,” he adds. “But what we can have is a massive display, instead of a projector, which shines light rays out giving a much clearer image. That’s the perfect controlled environment as you don’t have some of the challenges of the consumer market.”

This could then be extended out to VR use cases in retail, before a fall in costs would see it become common in home entertainment.

“Imagine there is no headset, no backpack, no batteries, no constraint ceiling,” he says excitedly. “You’ll walk in and you’ll swear that you’re looking at this gigantic whale or there’s a well-known character looking at you. It could be a concert: you’re going to see Britney Spears and you’ll swear they’re real because they’re right there. You could walk around and look at them and they look at you.”

And then after that, the holodeck in your home.
