NAB 2018: Vendors showcase the tools forging the post-production evolution with artificial intelligence (AI) powering the future of editing, grading and visual effects (VFX).

These trends, along with stronger firepower to handle massive data and the cloud, are already fusing together for more automated and, in theory, more creative production.

Greater collaboration, for example, which everyone agrees is a good thing both in terms of creativity and productivity, is being enabled by online access to work in progress and the tools to manipulate it.

Software has been separated from bespoke hardware to such an extent that the monolithic editing and finishing packages of old have been broken down into their constituent elements and interconnected with other tools offered through the cloud.

This means facilities can pick and choose the tools they need for a particular job and call up virtual servers to run them on, depending on the data density or turnaround time required.

New business models from vendors reflect the opportunity for customers to pay as they go, with subscription fees increasingly common. Incremental software updates are released online.

With more productions filming up to 8K to provide the greatest flexibility in post, there is increasing demand for ways to edit multiple streams of that footage at full rather than proxy resolution.

Workstations loaded with Nvidia, Intel or AMD processors have arrived to address this and make it increasingly possible to play back, stitch and post-process the raw content of massive data streams for near-set editing, VR and, a little down the line, the computational cinematography derived from light fields.

Moving the mundane to machine automation

Crunching the data, especially in fast-turnaround news and sports workflows, is a headache which AI or machine learning (ML) is designed to tackle.

AI/ML tools are being integrated into ingest and production asset management systems from all manner of vendors – Tedial, Dalet, Avid, TVU Networks and Piksel among them – designed to maintain efficiency and curate and extract vast quantities of metadata.

During a NAB conference session on the topic, USC School of Cinematic Arts professor Norman Hollyn pointed out that editors had once resisted the advent of digital nonlinear editing in the 1990s. “AI is bringing things into the post production world and if we don’t start to look at and embrace them, we’ll be ex-editors,” he said.

“AI is about assisted intelligence,” insisted Amazon Web Services M&E Worldwide Technical Leader Usman Shakeel at another NAB conference session.

“Different aspects of the workflow exist across different organisations, systems and physical locations,” he said. “How do you make sure it’s not lost? ML-aided tools can curate disaggregated sources of metadata.”

Aside from the more technical processes of discovery, curation and basic assembly, the more interesting question is whether machine learning can replace human creativity. “Can content ever create itself?” posed Shakeel.

There is already work afoot on automating sentiment analysis, or the ability to search based on a video or audio clip’s emotional connection.

Yves Bergquist, chief executive at AI firm Novamente, said he is creating a “knowledge engine” with the University of Southern California to analyse audience sentiment across TV scripts and performance data.

“The aim is to link together scene-level attributes of narrative with character attributes and learn how they resonate or not with audiences,” he explained. “There’s an enormous amount of complexity in the stories we should be telling. You [Hollywood’s studios] need to be able to better understand the risk.”

In the long term, he forecast, “You’ll see lots of mass-produced content that’s extremely automated; higher level content will be less automated.”

The vendor’s viewpoint

Avid is one of the cornerstones of the industry: the de facto standard for editing and a hub for integration of a wide range of other manufacturers’ products. Arguably, however, its main news at NAB was around price.

It has steadily offered entry level versions of its software for free with the aim of onboarding new generations of post-production talent and persuading them to upgrade to more advanced versions.

Media Composer First, for example, has been downloaded 100,000 times since launch a year ago. Now it has made the more advanced Media Composer available at a lower subscription price of $20 (€16) per month.

For larger facilities, there’s Media Composer Ultimate, which throws in every high-end feature including Project Sharing and Shared Storage Management. Audio package Pro Tools is similarly now marketed in free, advanced and Ultimate versions, and the company also announced a free starter pack of its music notation tool Sibelius.

All of these products are now available from the cloud, specifically Microsoft Azure, and badged as Avid On Demand. This includes a new set of AI tools for automated content indexing, for jobs such as closed captioning verification, language detection, facial recognition, scene detection, and speech-to-text conversion.

Adobe describes its AI, Sensei, as a combination of “computational creativity, experience intelligence and content understanding” and continues to apply it to Adobe Premiere Pro.

This time it enables analysis of colour and light values from a reference image which in turn are applied to match a current shot. The final adjustment can then be saved as a ‘Look’ which can be applied to other shots. Face detection capabilities can also assist in skin tone matching.

Premiere Pro’s latest audio features allow more sound manipulation to be done within the NLE. For example, an ‘autoducking’ function, driven by Sensei, will automatically adjust soundtrack audio around dialogue, for single clips or an entire project. It creates automatic envelopes around dialogue and sound effects which can be manipulated and keyframed as desired.

Editors working with VR/360 video in both Premiere Pro and After Effects benefit from being able to view their work in Windows Mixed Reality head-mounted VR displays. Flat graphics can also be transformed into a 360-degree spherical format and there are additional transitions, effects and viewing options.

New camera format support in the software covers Sony’s X-OCN codec, Canon Cinema Raw Light and RED’s post pipeline.

Raw 8K video content is currently the most advanced standard for video playback, but current playback solutions are often unable to display these frames at full frame rate (30fps). As a result, video playback or editing is often jerky and slow, leading to longer editing times and user frustration.

Adobe says it has fixed this by running Premiere Pro CC on machines fitted with AMD’s Radeon Pro SSG processors. Editors can now work with multiple streams of uncompressed 4K or 8K footage “as smoothly as they could with lower-quality proxy files”.
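To give a rough sense of why raw 8K playback is demanding, the sketch below estimates the sustained data rate of a single stream at the 30fps quoted above. The frame sizes (7680x4320 for 8K, 3840x2160 for 4K) and the 12-bit, one-sample-per-photosite raw encoding are assumptions chosen for illustration; they are not figures from Adobe or AMD, and real camera formats vary.

```python
# Back-of-envelope data rate for one stream of uncompressed raw video.
# Assumptions (not from the article): 12 bits per sample, one sample per
# photosite (Bayer-style raw), UHD-family frame sizes, decimal megabytes.

def raw_stream_bytes_per_second(width: int, height: int,
                                bits_per_sample: int, fps: int) -> int:
    """Return the sustained byte rate needed to play one raw stream."""
    bits_per_frame = width * height * bits_per_sample
    return bits_per_frame * fps // 8


RATE_8K = raw_stream_bytes_per_second(7680, 4320, bits_per_sample=12, fps=30)
RATE_4K = raw_stream_bytes_per_second(3840, 2160, bits_per_sample=12, fps=30)

if __name__ == "__main__":
    for label, rate in (("8K", RATE_8K), ("4K", RATE_4K)):
        print(f"{label}: {rate / 10**6:,.0f} MB/s per stream")
```

Under these assumptions a single 8K stream needs roughly 1.5GB/s, four times the 4K figure, and the requirement scales with every additional stream on the timeline.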

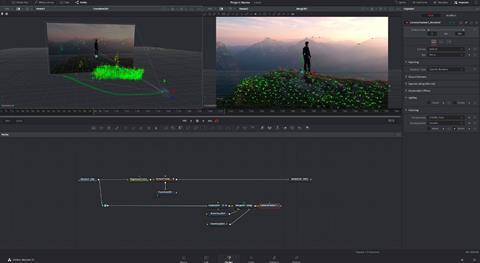

Showing increasing signs of encroaching on Adobe and Avid territory, Blackmagic Design gave finishing software DaVinci Resolve a new module that adds visual effects and motion graphics tools into the workflow. Previously available as a stand-alone application, Fusion is being integrated into Resolve 15 with the final version ready in about 18 months.

Colorists can play with a new HD to 8K up-scaling feature and expanded HDR support with Dolby Vision metadata analysis and native HDR10+ grading controls.

Resolve is undoubtedly the leading colour correction tool and includes audio software culled from the company’s acquisition of Fairlight. Blackmagic now claims that it is “the fastest growing nonlinear video editor in the industry.”

There’s a downloadable free version of DaVinci Resolve 15, while a Studio version, which adds multi-user collaboration, support for frame rates over 60p, VR, and more filters and effects, costs €245 with “no annual subscription fees or ongoing licensing costs.”

In a remark aimed at Avid and Adobe, Blackmagic states that Resolve 15 Studio “costs less than all other cloud-based software subscriptions and it does not require an internet connection once the software has been activated. That means customers don’t have to worry about losing work in the middle of a job if there is no internet connection.”

In addition, BMD introduced a second version of the Cintel telecine costing €21,000. This one includes a Thunderbolt 2 connection capable of scanning 35mm negative film directly into Resolve for immediate digital 4K HDR conversion. Support for 16mm HDR scanning will be added later.

ProRes goes RAW

In 2007, Apple introduced the Apple ProRes codec, providing real-time, multistream editing performance and reduced storage rates for 4:2:2 and 4:4:4:4 video. Now, it’s gone one better and developed ProRes RAW, based on the same principles and underlying technology as the existing codec, but applied to a sensor’s pristine raw image data rather than conventional image pixels.

Use of raw information is ideal for HDR content creation, but existing workflows can be cumbersome and data-intensive. The extent to which you should get excited depends a lot on whether you work with Apple Final Cut Pro as a finishing tool. If so, then this will make life a lot easier. Cameras supporting the format from launch include Sony’s FS5 and FS7, Panasonic EVA1 and Varicam LT and Canon C300 Mk II.

There are two compression levels: Apple ProRes RAW and ProRes RAW HQ with additional quality available at the latter’s higher data rate. Compression-related visible artefacts are “very unlikely” with ProRes RAW, and “extremely unlikely” with ProRes RAW HQ, states Apple, which also presents bench tested comparisons on playback and rendering with Canon and RED’s raw video formats in a white paper.

Atomos Sumo 19” and Shogun Inferno recorder/monitors are currently the only devices to offer ProRes RAW recording (as a firmware upgrade). Drone and gimbal maker DJI is also making its equipment compatible with the new format.

Also new from Atomos is a smaller version of the Shogun Inferno intended for carriage on mirrorless cameras and DSLRs and costing €560. The Ninja V is 5.2”, weighs 320g and has an HD display suitable for use in bright sunlight. The device is capable of recording and displaying UHD and HDR, in both PQ and HLG standards, with output to mini SSD drives.

With HDMI 2.0 connections, however, it cannot record in ProRes RAW (it records plain ProRes or Avid DNxHR instead), but Atomos promises it will do so as and when cameras are introduced that can output the format via HDMI.

When productions can be on location for two or three months, recording three to four terabytes of footage per day from multiple cameras is not unusual, and neither are bottlenecks in workflow. To the rescue comes a new G-Tech range of SSDs from Western Digital, judged by the firm as the world’s most powerful.

With transfer rates of 2800MB/s, the G-Drive mobile Pro SSD can help filmmakers edit multi-streams of 8K footage, quickly render projects at full resolution, and transfer as much as a terabyte of content in less than seven minutes, it states.

This comes with a single Thunderbolt 3 port and in capacities of 500GB for $660/€524 and 1TB ($1050/€850). An even more powerful version with over 7TB capacity and dual Thunderbolt 3 ports to daisy-chain up to five extra devices will cost over €6000.
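As a quick sanity check on the figures quoted above, the sketch below works out how long a transfer would take at the stated 2800MB/s. It assumes decimal units (1TB = 10^12 bytes) and that the peak rate is sustained for the whole copy, which real-world transfers rarely manage; the function and variable names are illustrative only.

```python
# Transfer-time arithmetic for the quoted 2800 MB/s rate.
# Assumptions: decimal units and a sustained peak rate (best case).

MB = 10**6
TB = 10**12
RATE_BYTES_PER_S = 2800 * MB


def transfer_minutes(size_bytes: float,
                     rate_bytes_per_s: float = RATE_BYTES_PER_S) -> float:
    """Return the best-case transfer time in minutes."""
    if rate_bytes_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_bytes / rate_bytes_per_s / 60


one_tb_minutes = transfer_minutes(1 * TB)
# A day's shoot of three to four terabytes, as mentioned earlier.
daily_minutes = [transfer_minutes(n * TB) for n in (3, 4)]

if __name__ == "__main__":
    print(f"1 TB: {one_tb_minutes:.1f} min")
    print(f"3-4 TB: {daily_minutes[0]:.1f} to {daily_minutes[1]:.1f} min")
```

On these assumptions a terabyte moves in about six minutes, consistent with the "less than seven minutes" claim, and a full day's footage of three to four terabytes would take roughly 18 to 24 minutes.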

British VFX tools developer Foundry has been developing a cloud-based VFX platform for a while and finally released it under the name Athera, backed by Google as the cloud provider. Particularly aimed at smaller facilities which may lack some of the firepower of the bigger beasts like DNeg or Cinesite, Athera centralises storage, creative tools and pipelines for on-demand access. VFX freelancers hired by studios may also enjoy the flexibility this allows.

The tool set is populated by Foundry products including Nuke, Katana, Modo and Mari, but other packages are available too, such as software from SideFX, Blender and Chaos Group. Two clear omissions at this stage are Adobe (which showed interest in acquiring Foundry a couple of years ago) and Autodesk.

The cloud is being used for more and more parts of a VFX project’s construction.

MPC’s VFX Oscar-winning work for The Jungle Book was greatly assisted by being able to call on Google GPUs for rendering and therefore negate the cost and bottleneck of trying to wrangle this within the facility itself. Athera, though, is the industry’s first end-to-end commercial cloud offer and all Foundry needs now are some juicy reference case studies to prove its worth.

Mistika Review from SGO provides real-time playback and review of VFX shots or clips, supporting 8K, high frame rates and VR projects. It allows project leaders to check the work’s quality and status, change the speed or frame rate, zoom in, and sign off work remotely. It will be available soon, like so much of the post tools sector, on a subscription basis from €19 a month.
