Is VR just a stepping stone on the path to holographic video content creation and display?

Holographic virtual reality has been part of popular culture at least since Princess Leia stored her holo-selfie aboard R2-D2 in 1977, and the Holodeck began making regular appearances in Star Trek from 1987.

There are those who believe that holographic video is on the threshold of a major breakthrough that will transform the future of media.

“Our goal is to achieve what science fiction has promised us, and science has yet to deliver,” says Jon Karafin, CEO of Light Field Lab and former head of video at camera maker Lytro, which came closer than any other company to making light field capture mainstream.

“We are on the verge of making this happen and not just for entertainment – it will be ubiquitous.”

In theory, holographic video, or holographic light field rendering, produces stunningly realistic three-dimensional images that can be viewed from any vantage point.

This now has the attention of computing powerhouses Microsoft, Facebook, Apple and Google.

At heart is the nagging belief that current models of VR and AR, which require consumers to wear some form of unnatural head-mounted computer, risk going the same way as 3D TV.

Google, in particular, sees the creation of light fields, which it describes as a set of advanced capture, stitching, and rendering algorithms, as the solution to ultra-realistic VR. It has a light-field camera in development and just launched a Light Fields app for viewing on the SteamVR game platform.

“To create the most realistic sense of presence, what we show in VR needs to be as close as possible to what you’d see if you were really there,” explains Paul Debevec, Google VR’s senior researcher. “Light fields can deliver a high-quality sense of presence by producing motion parallax and extremely realistic textures and lighting.”

In truth, the industry has been pushing toward holography for some time.

On a continuum from stereo 3D to glasses-free autostereoscopic displays, 360-degree virtual reality and mixed reality headsets like Microsoft’s HoloLens and Magic Leap, the aim is to bring digitised imagery closer to how we experience reality.

You could of course extend the artistic line as far back as the dawn of cinema, the first photograph, impressionism, religious iconography and the cave painting. Or as far forward as being able to experience holograms on our mobile phones, a development thought possible if processing for encoding and decoding is incorporated into the emerging 5G network.

“The dream has always been to display objects that are indistinguishable from reality,” says Karafin.

“The light field contains the computational construct for the way we see the natural world around us.”

There are two ways to create a hologram. The first uses a plenoptic array of cameras, ideally recording all the light travelling in all directions in a given volume of space.

The second is to create a 3D model of a scene which might be represented as a polygon mesh or a point cloud.
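For the second route, the views a light field display needs can be rendered from the model itself. The sketch below is a minimal illustration, assuming a coloured point cloud, an idealised pinhole camera looking down the +z axis, and a flat grid of virtual camera positions; the function names and parameters are hypothetical, not any vendor's pipeline. Moving the virtual camera to the right shifts near points to the left in the image, which is the motion parallax a light field has to reproduce.

```python
import numpy as np


def project_points(points, colours, cam_pos, focal, width, height):
    """Render a point cloud through a pinhole camera that looks down +z.

    points: (N, 3) world coordinates; colours: (N,) intensities;
    cam_pos: (3,) camera centre; focal: focal length in pixels.
    A z-buffer keeps only the nearest point that lands on each pixel.
    """
    image = np.zeros((height, width))
    zbuf = np.full((height, width), np.inf)
    for (x, y, z), c in zip(points - cam_pos, colours):
        if z <= 0:
            continue  # point is behind the camera
        px = int(round(focal * x / z + width / 2))
        py = int(round(focal * y / z + height / 2))
        if 0 <= px < width and 0 <= py < height and z < zbuf[py, px]:
            zbuf[py, px] = z
            image[py, px] = c
    return image


def render_light_field(points, colours, grid, spacing, focal, width, height):
    """Render a grid x grid set of views from cameras on the plane z = 0.

    Returns an array of shape (grid, grid, height, width) indexed [row, column].
    """
    offsets = (np.arange(grid) - (grid - 1) / 2) * spacing
    return np.array([[project_points(points, colours, np.array([ox, oy, 0.0]),
                                     focal, width, height)
                      for ox in offsets]
                     for oy in offsets])
```

A production renderer would rasterise meshes with proper lighting; the point here is only that a 3D model can be sampled from as many viewpoints as the display requires.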

Either way, computation is not problematic, according to V. Michael Bove, Principal Research Scientist and head of the Object-Based Media group at the MIT Media Lab.

“Once you have a plenoptic image, you use a Fourier transform (a mathematical operation that breaks a signal down into its component frequencies) and it’s pretty simple to display it as a hologram. This is hugely straightforward and easier than taking a CGI model and rendering it to produce the same image.”
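Bove does not spell out the procedure. One well-known way to turn a light field into a hologram is the holographic stereogram: the hologram plane is divided into small patches (often called “hogels”), and each patch is the Fourier transform of the directional views passing through that point, because a plane wave leaving at a given angle corresponds to a single spatial frequency across the patch. The sketch below assumes that approach, a monochrome light field stored as a hypothetical array `L[y, x, v, u]`, and a random phase “diffuser”; it omits the reference beam and the encoding a real amplitude- or phase-only modulator would need.

```python
import numpy as np


def light_field_to_hologram(light_field, seed=0):
    """Holographic-stereogram sketch: one Fourier-transformed patch per position.

    light_field: array L[y, x, v, u] of non-negative intensities, where (y, x) is
    the position on the hologram plane and (v, u) indexes ray direction.
    Returns a complex field of shape (ny * nv, nx * nu).
    """
    rng = np.random.default_rng(seed)
    ny, nx, nv, nu = light_field.shape
    hologram = np.zeros((ny * nv, nx * nu), dtype=complex)
    for y in range(ny):
        for x in range(nx):
            amplitude = np.sqrt(light_field[y, x])                # intensity -> amplitude
            diffuser = np.exp(2j * np.pi * rng.random((nv, nu)))  # random phase spreads energy
            patch = np.fft.fftshift(np.fft.fft2(amplitude * diffuser))
            hologram[y * nv:(y + 1) * nv, x * nu:(x + 1) * nu] = patch
    return hologram


def reconstruct_views(hologram, nv, nu):
    """Invert each patch to recover the directional intensities it would replay."""
    ny, nx = hologram.shape[0] // nv, hologram.shape[1] // nu
    views = np.zeros((ny, nx, nv, nu))
    for y in range(ny):
        for x in range(nx):
            patch = hologram[y * nv:(y + 1) * nv, x * nu:(x + 1) * nu]
            field = np.fft.ifft2(np.fft.ifftshift(patch))
            views[y, x] = np.abs(field) ** 2
    return views
```

Because the transform is invertible and the random phase cancels in the intensity, `reconstruct_views` returns the original light field, which is a quick sanity check that no directional information is lost in the conversion.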

Karafin defines a hologram “as a projection of the encoding of the light field”, a formula that differs significantly from that of Magic Leap which says it uses “lightfield photonics” to generate digital light at different depths and will “blend seamlessly with natural light to produce lifelike digital objects that coexist in the real world.”

“Many companies often claim to be light field, but rather are multi-planar stereoscopic,” says Karafin.

Other research has focused on volumetric display, typically using the projection of laser light onto smoke or dust. Karafin says this is not the same as a true light field, and has limited range, colour and resolution.

Holographic display

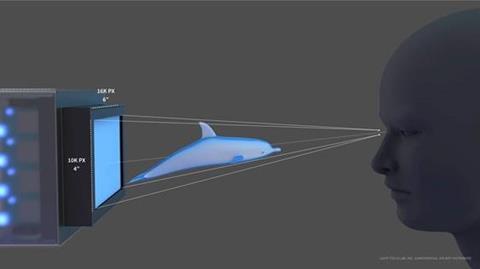

Light Field Lab, he explains, is building a true holographic display with which the viewer won’t need to wear any diverting headgear, cabling or accessories.

“A true holographic display projects and converges rays of light such that a viewer’s eyes can freely focus on generated virtual images as if they were real objects.

“You will have complete freedom of movement and be able to see and focus on an object no matter the angle at which you view it. Everything you see is free from motion latency and discomfort. In the holographic future, there will no longer be a distinction between the real and the synthetic.”

Backed by multi-billion-dollar venture capital firms (which have invested several million dollars to date), the Silicon Valley start-up is busy showing prototypes of a flat-panel holographic monitor to select investors.

Each prototype is a 6 x 4-inch module of the larger architecture, yet already projects video at 150MP. The product roadmap calls for larger modules, at least 2 ft x 2 ft, designed to be stacked into a “video wall”.
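A back-of-envelope calculation shows what those figures imply. The sketch below assumes the 150MP is spread uniformly over the 6 x 4-inch surface and that larger modules keep the same density; the article does not say whether “150MP” counts conventional pixels, sub-pixels or rays, so treat the result as an order-of-magnitude estimate. Since the roadmap says “at least” 2 ft x 2 ft, the module figure is a lower bound under that assumption.

```python
import math


def scale_panel(pixels, width_in, height_in, module_width_in, module_height_in):
    """Return (pixels per linear inch, pixels in a larger module) at the same density.

    Assumes the pixel count is spread uniformly over the quoted surface.
    """
    density = pixels / (width_in * height_in)  # pixels per square inch
    ppi = math.sqrt(density)                   # pixels per linear inch
    return ppi, density * module_width_in * module_height_in


# 150MP prototype at 6 x 4 inches, scaled to a 2 ft x 2 ft (24 x 24 inch) module.
ppi, module_pixels = scale_panel(150e6, 6, 4, 24, 24)
print(f"{ppi:.0f} pixels per inch, {module_pixels / 1e9:.1f} gigapixels per module")
```

This prints `2500 pixels per inch, 3.6 gigapixels per module`, roughly 24 times the prototype's count for each panel in the proposed video wall.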

Light Field Lab will enter the ‘alpha’ phase of development in 2019, with a view to launching in location-based entertainment, including theme parks, concerts and sporting events.

“Ultimately, we have a roadmap into the consumer market,” says Karafin. “But with that said, there’s quite a bit of technology maturation that needs to happen before we go there in a significant way.”

One issue with holographic monitors is sustaining the required data rates: each installation will require dedicated GPU computing and networking.
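To see why data rates matter, consider the uncompressed bandwidth of the quoted 150MP prototype. The sketch assumes 24-bit colour, 60 frames per second and 100 Gbit/s network links; none of these figures come from Light Field Lab, and real systems would compress or compute views locally on the GPU.

```python
import math


def raw_rate_gbps(pixels, bits_per_pixel=24, frames_per_second=60):
    """Uncompressed video bandwidth in gigabits per second."""
    return pixels * bits_per_pixel * frames_per_second / 1e9


def links_needed(rate_gbps, link_gbps=100):
    """How many network links of a given capacity the raw stream would fill."""
    return math.ceil(rate_gbps / link_gbps)


rate = raw_rate_gbps(150e6)      # the quoted 150MP prototype
print(rate, links_needed(rate))  # 216.0 3
```

Under these assumptions a single small prototype would already saturate two 100 Gbit/s links and spill onto a third, which is why each installation needs dedicated compute and networking rather than a conventional video feed.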

Sceptics may look at the fortunes of Lytro and wonder if history is repeating itself.

Before setting up the firm 18 months ago, Karafin and co-founders Brendan Bevensee and Ed Ibe helped to commercialise light field photography. After building a stills camera with a microlens array, Lytro steered the technology toward VR in 2015 with the launch of the Immerge camera, and also prototyped a cinema camera for VFX work, but ultimately without success. It went out of business in March.
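A microlens array places a grid of tiny lenses in front of the sensor, so that the pixels under each lenslet record light arriving from different directions. The sketch below shows how such a raw image can be rearranged into a 4D light field and sliced into “sub-aperture” views. It assumes idealised square lenslets exactly aligned to the pixel grid, each covering an `n x n` block; real decoders, including Lytro's, also have to calibrate lenslet rotation, vignetting and hexagonal layouts, which this omits.

```python
import numpy as np


def decode_lenslet_image(raw, n):
    """Rearrange a raw plenoptic sensor image into a 4D light field.

    Assumes square microlenses aligned to the pixel grid, each covering an
    n x n block of pixels. Returns L[v, u, y, x]: (v, u) is the pixel position
    under a lenslet (ray direction), (y, x) is the lenslet index (ray position).
    """
    h, w = raw.shape
    if h % n or w % n:
        raise ValueError("sensor size must be a multiple of the lenslet size")
    ny, nx = h // n, w // n
    # Split each axis into (lenslet index, pixel-within-lenslet) ...
    lf = raw.reshape(ny, n, nx, n)
    # ... then move the within-lenslet (direction) axes to the front.
    return lf.transpose(1, 3, 0, 2)


def sub_aperture_view(lf, v, u):
    """The scene as seen through one small region of the main lens aperture."""
    return lf[v, u]
```

Each sub-aperture view is simply every n-th pixel of the raw image, offset by (v, u); a camera array yields the same 4D structure directly, with the camera index playing the role of direction.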

Karafin suggests that holographic displays will help ensure the success of light field capture technologies.

“To be successful with any light field acquisition system, you need to have a native way to view it,” he says.

“I’m not over exaggerating when I say that in every meeting we have had with content creators in Hollywood over the past few years, we were asked ‘when do we get a holographic display?’”

How to create a hologram

Provided sufficient information about an object or scene is captured as data, it can be encoded and decoded as a hologram by Light Field Lab’s invention, he says. That means holographic content can be derived today from existing production techniques and technologies.

“Studios are already essentially capturing massive light field data sets on many productions. Some of this information gets used in postproduction, but when it is finally rendered as a 2D or a stereoscopic image, the fundamental light field is lost. It’s like creating a colour camera but only having radio to show it.”

He says the company’s prototype is able to produce holographic video when provided with captured light fields or “synthetic data” from computer games, CGI and VFX, which are “relatively straightforward as long as the appropriate metadata is provided.”

In addition to the capture of live-action light field content, Karafin suggests that any existing 2D content – a super-hero movie for example - can be converted into a form of light field through computational or artistic conversion.
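Karafin does not describe the conversion method. One common computational route is depth-image-based rendering: estimate a depth map for each frame (by machine learning or by artists), then shift each pixel sideways in proportion to its inverse depth to synthesise neighbouring viewpoints. The sketch below assumes the depth map is already available, uses a hypothetical `synthesize_view` helper, and leaves disoccluded holes as NaN for a later in-painting step.

```python
import numpy as np


def synthesize_view(image, depth, baseline, focal):
    """Forward-warp a 2D image to a viewpoint shifted right by `baseline`.

    disparity = focal * baseline / depth, in pixels. When two source pixels
    land on the same target, the nearer one wins (z-buffer). Target pixels
    that nothing lands on (disocclusions) are left as NaN.
    """
    h, w = image.shape
    out = np.full((h, w), np.nan)
    zbuf = np.full((h, w), np.inf)
    disparity = focal * baseline / depth
    for y in range(h):
        for x in range(w):
            tx = int(round(x - disparity[y, x]))  # camera moves right, scene moves left
            if 0 <= tx < w and depth[y, x] < zbuf[y, tx]:
                zbuf[y, tx] = depth[y, x]
                out[y, tx] = image[y, x]
    return out
```

Repeating this for a range of baselines produces a row of views, a crude light field whose quality depends entirely on how good the estimated depth and the hole filling are.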

“You can leverage every single piece of library content,” he says.

Other methods of existing capture can form the basis for light field. These include LiDAR laser scanning or range finding devices like Microsoft Kinect, which is commonly used in pre-visualisation; plenoptic camera rigs used to create specialist VFX sequences (famously The Matrix ‘bullet time’ effect), or systems like Intel’s FreeD which do a similar job on a wider scale by ringing sports stadia with high resolution cameras.
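Depth sensors such as LiDAR or Kinect report a distance per pixel, and turning that into 3D geometry is a short calculation under the standard pinhole model. The sketch below assumes a depth image in metres and known camera intrinsics (`fx`, `fy` focal lengths and `cx`, `cy` principal point, all in pixels); the values used for testing are illustrative, not those of any real sensor. Pixels reporting zero, meaning no return, are discarded.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into an (N, 3) point cloud.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, with (u, v)
    the pixel column and row. Pixels with depth 0 (no sensor return) are dropped.
    """
    v, u = np.indices(depth.shape)  # row and column index of every pixel
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]
```

The resulting point cloud is a 3D model of the kind the article describes as the second way to create a hologram, and can be re-rendered from new viewpoints.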

Google has snapped up remaining key members of Lytro to further its research and development.

It has been experimenting with a modified GoPro Odyssey Jump camera, reconfiguring it as a vertical arc of 16 cameras mounted on a rotating platform. The resulting light field is aligned and compressed in a custom dataset file that’s read by special rendering software Google has implemented as a plug-in for the Unity game engine.
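Spinning a vertical arc of cameras sweeps their optical centres over the surface of a sphere, which is what lets the rig sample rays arriving from every direction. The sketch below computes those positions. The radius, arc span and number of rotation steps are illustrative assumptions, not Google's specification; only the 16 cameras comes from the article.

```python
import numpy as np


def rig_camera_positions(radius=0.35, cameras_on_arc=16, rotation_steps=72,
                         arc_span_deg=160.0):
    """Camera centres swept out by a vertical arc spinning about the vertical axis.

    All defaults except cameras_on_arc are hypothetical. An arc span below 180
    degrees avoids every rotation step duplicating the same camera at the poles.
    Returns an array of shape (rotation_steps, cameras_on_arc, 3); y is up.
    """
    elev = np.radians(np.linspace(-arc_span_deg / 2, arc_span_deg / 2, cameras_on_arc))
    azim = np.radians(np.arange(rotation_steps) * 360.0 / rotation_steps)
    el, az = np.meshgrid(elev, azim)  # both shaped (rotation_steps, cameras_on_arc)
    x = radius * np.cos(el) * np.cos(az)
    y = radius * np.sin(el)
    z = radius * np.cos(el) * np.sin(az)
    return np.stack([x, y, z], axis=-1)
```

Every position lies at the same distance from the centre, so the captured images together describe light crossing a single spherical surface.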

As explained by Debevec, “Light field rendering allows us to synthesise new views of the scene anywhere within the spherical volume by sampling and interpolating the rays of light recorded by the cameras on the rig.”
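The simplest form of “sampling and interpolating” is to blend the captured images nearest the requested viewpoint. The sketch below does plain bilinear blending across the rig's rotation steps and arc positions, assuming a hypothetical array of images indexed by (rotation step, arc camera). It is deliberately naive: Google's renderer interpolates individual rays using scene geometry, whereas blending whole images like this produces ghosting for objects far from the capture sphere.

```python
import numpy as np


def interpolate_view(views, rot, arc):
    """Bilinearly blend the four captured images nearest a fractional rig position.

    views: array of shape (n_rot, n_arc, H, W), one image per platform step and
    arc camera (n_arc must be at least 2). rot, arc: fractional indices; the
    rotation wraps around, the arc is clamped at its ends.
    """
    n_rot, n_arc = views.shape[:2]
    arc = min(max(arc, 0.0), n_arc - 1.0)
    a0 = min(int(np.floor(arc)), n_arc - 2)  # lower arc neighbour
    ta = arc - a0                            # weight of the upper arc neighbour
    r0 = int(np.floor(rot))
    tr = rot - r0                            # weight of the next rotation step
    r0, r1 = r0 % n_rot, (r0 + 1) % n_rot
    return ((1 - tr) * (1 - ta) * views[r0, a0]
            + tr * (1 - ta) * views[r1, a0]
            + (1 - tr) * ta * views[r0, a0 + 1]
            + tr * ta * views[r1, a0 + 1])
```

At integer positions the function returns the captured image exactly; between them it cross-fades, which is the baseline that geometry-aware ray interpolation improves on.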

Google has demonstrated this on documentary subjects, including the flight deck of the Space Shuttle Discovery.

Facebook, meanwhile, has partnered with OTOY, developer of a cloud-based rendering technology for modelling light rays, to fuel its virtual reality ambitions.

Apple has registered a light field patent [no. 9,681,096] which appears to focus on augmented video conferencing.

Holographic content creation may also have a profound impact on narrative storytelling.

“It’s a new storytelling device,” says Karafin. “A hologram of an actor promises to be as real as if you were in the same room talking to that person.”

He describes the experience as “like watching a play in a theatre in the round” where everyone sees the same narrative but views it from his or her own place in the audience.

“Our passion is for immersive displays that provide a social experience, instead of one where the audience members are blocked off from each other by headsets or other devices.”

He envisions Light Field Lab’s panels not just stacked into a front facing wall, but forming “the side walls, floor and ceiling” of a venue. “Every direction will contain light from the holographic displays. When you have that, you can enable the Holodeck.”

“This is what we believe is the future in how we will interact with media. We have already proved the fundamentals of this technology. We know it sounds crazy, but every day the goal gets a little closer and we don’t sleep trying to make it happen - and why would you when you’re building holograms!”

Volumetric haptics

Yet generating and displaying holograms is just the first stage. Research is already turning to the ability to touch virtual objects.

“When you start working in three dimensional media it is natural to want to interact with it and then you run into the problem of interface,” explains MIT’s Bove.

MIT students have designed an air-vortex system that provides mid-air haptic feedback when a user touches virtual objects shown on holographic, aerial and other 3D displays.

“The system knows where your hand is because we track it,” says Bove. “When you interact with the display’s imagery, a nozzle fitted to the display aims a pulse of air around your fingers from up to a metre away. There’s no fine textural detail – you can’t tell if the object is a sponge or a rock – but it is extensive and powerful.”
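The aiming step Bove describes reduces to simple geometry once the hand is tracked: compute the direction from the nozzle to the fingertip and check it is within range. The sketch below assumes a hypothetical coordinate frame in metres (+z straight out of the display, +x right, +y up) and a pan/tilt nozzle; only the one-metre range comes from the article.

```python
import math

MAX_RANGE_M = 1.0  # range quoted by Bove


def aim_nozzle(hand_xyz, nozzle_xyz=(0.0, 0.0, 0.0)):
    """Return (pan_deg, tilt_deg) to point the nozzle at a tracked hand.

    Returns None if the hand is out of range (or exactly at the nozzle).
    Pan rotates about the vertical axis; tilt raises or lowers the nozzle.
    """
    dx, dy, dz = (h - n for h, n in zip(hand_xyz, nozzle_xyz))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist == 0 or dist > MAX_RANGE_M:
        return None
    pan = math.degrees(math.atan2(dx, dz))
    tilt = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return pan, tilt
```

In a working system this would run every tracking frame, with the pulse fired only when the tracked fingertip intersects the displayed object.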

Similar technology has been tested by the US military as a means of non-lethal crowd control. According to Bove, it could also be adapted for interfaces where “buttons float in space” and a pulse of air confirms that a button has been pressed.

Light fields and the future of cinematography

One of the obstacles to light field capture being taken seriously in Hollywood is the impact it could have on cinematography. Some DPs see it as a threat to their craft, since fundamental decisions such as composition and exposure could be revisited by technicians in post.

On the other hand, the technology might be embraced by a newer generation of cinematographers who grow up with the creative possibilities of being able to move a ‘virtual’ camera around a photorealistic 3D set.

For example, researchers in the Moving Picture Technologies department at Germany’s Fraunhofer IIS have demonstrated how light field technology makes it possible to change focus and depth of field in postproduction, or to construct stereo images from the captured depth information.
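Changing focus after the shot is classically done by “shift-and-add”: each sub-aperture view is shifted in proportion to its position on the aperture and the results are averaged, so objects at the chosen depth line up and stay sharp while everything else blurs. The sketch below is a generic illustration of that technique, not Fraunhofer's implementation; it assumes views on a regular grid with known offsets and uses whole-pixel shifts via `np.roll`, which wraps at the edges, where real tools would interpolate sub-pixel shifts.

```python
import numpy as np


def refocus(views, offsets, alpha):
    """Synthetic refocus by shift-and-add.

    views: list of 2D sub-aperture images; offsets: matching (du, dv) positions
    of each view on the aperture, in view-grid units. alpha: pixels of shift
    per unit offset, which selects the depth that ends up in focus.
    """
    acc = np.zeros_like(views[0], dtype=float)
    for img, (du, dv) in zip(views, offsets):
        shift = (int(round(-alpha * dv)), int(round(-alpha * du)))
        acc += np.roll(img, shift, axis=(0, 1))
    return acc / len(views)
```

Sweeping `alpha` moves the focal plane through the scene, and narrowing the set of views averaged together deepens the depth of field, the two controls the Fraunhofer work puts into postproduction.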

“Fraunhofer have made it possible for anyone to set up a camera array for capture of volumes, and then process them in a common image-processing framework,” says Simon Robinson, co-founder of VFX software developer Foundry. “It still requires the adoption of new technology on set, which will be a barrier to widespread use for some time.”

There are a number of published experiments in this area, not least Foundry’s own research collaboration with Figment Productions and the University of Surrey to create a cinematic live-action VR experience, Kinch & The Double World, using light fields.

The longer term appeal is much more intriguing.

“If we can capture the light falling on a camera (rather than just 2D images made from that light) and we can have a post-production workflow that deals with light (not 2D images), and we can also have displays that shine the light back at the viewer, so they feel like they are looking through a window, then we’ve truly made a creative transition,” says Robinson.

A studio would have to be convinced that the burden of this technology (in rig size and in data size) provides benefits that outweigh the pain.

“The missing piece here is the display,” says Robinson. “Once we have genuine proof of light field displays that have the potential to reach consumers, then that changes the motivation completely. It’ll become worth the pain, to create something different.”

Camera developer Red is about to launch a smartphone intended to help cinematographers view, and possibly even calibrate, the z (depth) coordinates of a scene. The Hydrogen One is believed to feature a 5.7-inch screen made by Silicon Valley display maker Leia Inc, which uses ‘diffractive lightfield backlighting’ to show what Red calls 4-View (4V) holographics.

Red is also launching the ‘Hydrogen Network’, a platform for sharing or selling 4V content.

Just as cinematographers over the last ten years have adjusted to working digitally, so the cinematographer of the not-too-distant future might be reimagined as a sculptor of light, a description not a million miles removed from how many DPs already view their art.

Interested in immersive? The NextGen: Interactive + Immersive Experiences conference track at IBC2018 will provide insight into developments within the worlds of eSports, live production, AR and social.
