NAB 2019: Virtual set solutions powered by games engines proved a big draw for the live broadcast as well as the scripted market.

The fusion of games engine rendering with live broadcast has taken virtual set solutions to another level, with photoreal 3D graphic objects appearing indistinguishable from reality.

At NAB, all the leading developments in this area were powered by Epic Games' Unreal Engine. Games engines were originally designed to render polygons, textures and lighting quickly in video games, and they can seriously improve the graphics, animation and physics of conventional broadcast character generators and graphics packages.

Every vendor also claims that its integration of Unreal Engine creates the most realistic content for virtual sets and for virtual and mixed reality.

Some go further and suggest that their virtual production systems transcend their real-time broadcast boundaries, providing real-time post-production and high-end pre-visualisation for the episodic and film markets.

One of those is Brainstorm, the Spanish developer behind InfinitySet. The latest version of the software takes advantage of Nvidia GPU technology and Unreal Engine 4 (UE4) for rendering. Nvidia's SLI technology can connect several GPUs in parallel to boost performance, so that InfinitySet can deliver real-time ray tracing for much more accurate rendering, especially under complex lighting conditions.

“Ray tracing offers more natural, more realistic rendered images, which is essential for photorealistic content generation,” explained Héctor Viguer, Brainstorm’s chief technology officer and innovation director. “InfinitySet can create virtual content which can’t be distinguished from reality.”

These developments open the door, he says, for content providers to create “amazingly rendered” backgrounds and scenes for drama or even film production, “significantly reducing costs”.

He claimed: “For other broadcast applications such as virtual sets or AR, InfinitySet provides unmatched, hyper-realistic quality both for backgrounds and graphics.”

Like competing systems, InfinitySet acts as a hub for the range of technologies and hardware required for virtual set and augmented reality operation, such as hardware chroma keyers, tracking devices, cameras and mixers.

Vizrt’s Viz Engine 4, for example, includes a built-in asset management tool, downstream keyers, switcher functionality, and DVEs. The company billed its NAB update as one of the most important ever, presenting its new Reality Fusion render pipeline for delivering more realistic effects and real-time performance. Integration with Unreal Engine 4 adds the flexibility of having backdrops with physical simulations such as trees blowing in the wind, combined with a template-driven workflow for foreground graphics.

The release uses physically based rendering and global illumination, among other techniques, “to achieve realism for virtual studios and AR graphics to levels never seen before,” said Vizrt chief technology officer Gerhard Lang.

ChyronHego offered Fresh, a new graphics-rendering solution based on UE4.

“News, weather, and sports producers no longer need to struggle with multiple types of render engines and toolsets, which can result in varying degrees of quality,” contended Alon Stoerman, ChyronHego’s senior product manager for live production solutions.

“This means producers are able to tell a better story through AR graphics that look orders-of-magnitude better than graphics created with traditional rendering engines. They’re also able to do it faster and easier than ever before, since Fresh can be operated as an integral part of the rundown. These are truly unique capabilities in the industry.”

He explained that a built-in library of 3D graphic objects sets Fresh apart from competitor systems that require the broadcast elements to be created in a traditional graphics-rendering engine and then added as a separate layer on top of the Unreal scene.

“Not only does this requirement add more time and complexity (a liability during a breaking news or weather event), but the resulting graphics lack the realism and ‘look’ of the gaming engine,” Stoerman argued. “With Fresh the graphics are rendered as part of the UE4 scene and carry the same photorealistic and hyper-realistic look as the other scene elements.”

Ross Video had adapted The Future Group’s (TFG) Unreal Engine broadcast graphics software for its virtual set and robotic camera solutions, but has now parted ways with the Oslo-based developer.

Instead, it is offering its own UE4-based virtual studio rendering package, called Voyager. It works with Ross’s control software and will support a variety of tracking protocols and camera mounts.

“Ross has worked hard over the last few years to put Unreal based rendering on the map for virtual production,” said Jeff Moore, EVP at Ross. “We see our in-house development of an Unreal-based system as a natural evolution of our ability to provide more complete solutions and this liberates us to move at a faster pace, without external constraints.”

The Future Group, meanwhile, has been evolving its technology, and makes extravagant claims for its software, now rebranded Pixotope (from Frontier).

“With Pixotope, we take the incredible 3D, VR, AR and data technology that’s emerging at an ever-increasing rate, and make it possible, easy even, to use it in every production, at almost any budget,” said Halvor Vislie, chief executive officer.

“The real and the virtual digital worlds are converging, setting the scene for artistic and commercial opportunities on a massive scale. Mixed reality, interactive media productions can engage audiences in new ways, opening up new business models.”

The software runs on commodity hardware and is available by subscription. It is a model that will change the virtual production landscape forever, TFG claims.

“It’s transformative for the industry,” Vislie said. “All the power, quality and stability demanded by broadcasters, without the need for expensive, proprietary hardware. And no massive capital outlay: just an easy monthly payment.”

Pixotope includes a real-time compositing engine working at 60fps in HD and 4K, and TFG claims perfect synchronisation between the rendered objects and the live action. In a partnership with Ikinema and motion tracking technology firm Xsens, TFG is offering a real-time production process for capturing AR characters, or ‘talent interactive content’.

“Real-time animation and live virtual character puppeteering … is one of the most costly and difficult types of production to do. We have collectively created the solution our customers are looking for,” added chief technology officer Marcus Brodersen.

With Arraiy, a provider of computer vision solutions, TFG aims to deliver AI-based real-time VFX. By the end of 2019, Arraiy will release one tool to perform real-time matting without a green screen and another to perform real-time depth extraction and occlusion solving, both of which will be added to Pixotope.

UK broadcast graphics company Moov, whose clients include Sky and BT Sport, announced at NAB that it would use Pixotope on virtual studio and augmented reality graphics projects.

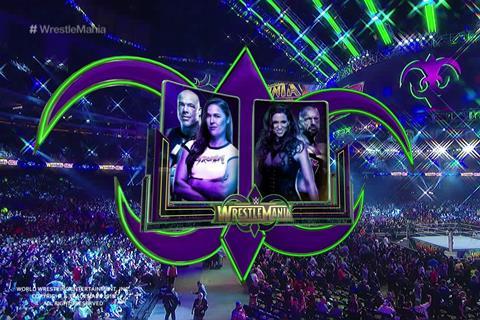

Virtual reality was little in evidence at NAB. The technology is taking a back seat until 5G enables real-time, high-resolution, high-fidelity 360-degree broadcasts and more robust ways of making money from VR production emerge. One of the leading live VR producers, NextVR, did not exhibit in Las Vegas.

Sports graphics specialist FingerWorks, though, showed how 3D stereo graphics could be output for viewing on head-mounted displays such as PlayStation VR, Gear VR, Vive or Facebook’s Oculus headsets.

“Broadcast VR cameras record the two ‘eyes’ of the stereo VR image. Our graphical telestrator technology is inserted on the broadcast side by an operator or analyst. They then stream out equirectangular images to the headsets where they are stitched together to present a smooth global visual presentation,” explained FingerWorks.

Sony previewed a development of its Hawk-Eye graphics tool that can now be used to generate 3D models of a game. Using six cameras around the field, the system tracks 17 points on the skeleton of every player, as well as the ball, to create a large dataset. Combined with virtual graphics, this would allow a fan to view a 3D model of the game and move around within that 3D world.

Ncam Technologies unveiled the latest version of its camera tracking solution, Ncam Reality, which supports an additional camera bar for tracking environments that are subject to dynamic lighting changes.

Ncam chief executive Nic Hatch said: “This new release solves many of the common challenges faced by AR users, as well as providing new features that will greatly enhance AR projects, whether for VFX pre-visualisation, real-time VFX, virtual production, or live, real-time broadcast graphics.”

VR on hold

Although 10.6 million ‘integrated display’ VR head-mounted displays (including console, PC and all-in-one headsets) shipped in 2018, along with a further 28 million mobile phone-based VR viewers, analyst Futuresource Consulting expects uptake of VR hardware to remain modest for the foreseeable future, with little of the available content engaging the mass market.

In the long term, the outlook for VR remains positive, Futuresource suggests, as VR technology and hardware continue to develop and improve the user experience. “Access to and the availability of successful VR content continues to be a sticking point throughout the industry, with content development caught between a currently small active base of VR users, limiting the potential sales for VR software, and the substantial investment required to produce high quality VR experiences,” said analyst James Manning Smith.

“We expect that as the installed base of VR headsets grows, there will be further interest in content creation as the potential eyeballs and players for VR content creates a more lucrative market.”

No comments yet