This paper offers a comprehensive overview of state-of-the-art volumetric video methods based on neural radiance fields, including their respective advantages and drawbacks.

Over the past decades, video consumption and video-capable devices have spread worldwide. In 2014, the arrival of mainstream virtual reality headsets marked a pivotal moment for 360° video accessibility. Today, immersive devices such as the Apple Vision Pro, as well as smartphones and tablets with spatial capabilities, can provide users with real-time six-degrees-of-freedom (6DoF) navigation. However, a lack of engaging content is holding back potential applications in areas such as training and entertainment. Volumetric video is a promising solution, but its production remains challenging: natural 3D+t reconstruction, coding, and rendering all still demand intensive computational resources.

In 2020, the groundbreaking Neural Radiance Field (NeRF) paper introduced a new way to generate natural free-viewpoint renderings of real scenes from sparsely captured views. Follow-up research has produced faster and more flexible methods, such as the widely used 3D Gaussian Splatting. However, when applied to dynamic scenes, these approaches require an independent model for each frame, which makes them poorly suited to representing volumetric video. To overcome this temporal limitation, extensions of radiance field techniques exploit temporal redundancy to create a compact, temporally consistent, and editable volumetric video representation.


IET announce Best of IBC Technical Papers

The IET have announced the publication of The best of IET and IBC 2024 from IBC2024, once again showcasing the groundbreaking research presented through the papers. The papers have been selected by IBC’s Technical Papers Committee for being novel, topical, analytical and well-written and which have the potential to make a significant impact upon the media industry. 327 papers were submitted this year, and after a rigorous selection process this publication features the ten papers deemed by the judges to be the best.

Technical Papers 2024 Session: 5G Case Studies – public network slicing trials and striving for low latency

In this session from IBC2024, Telestra Broadcast Service and the BBC present their work 5G Case Studies as part of the IBC Technical Papers.

Technical Papers 2024 Session: AI in Production – training and targeting

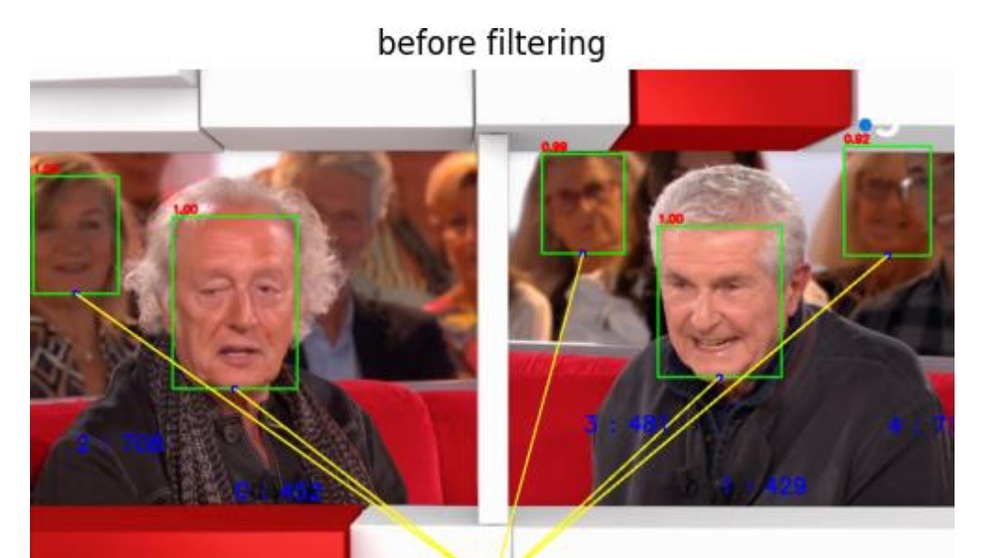

In this session from IBC2024, three authors from NHK, Viaccess-Orca and European Broadcasting Union present their work on the application of AI to media production as part of the IBC Technical Papers.

Technical Papers 2024: Audio & Speech – advances in production

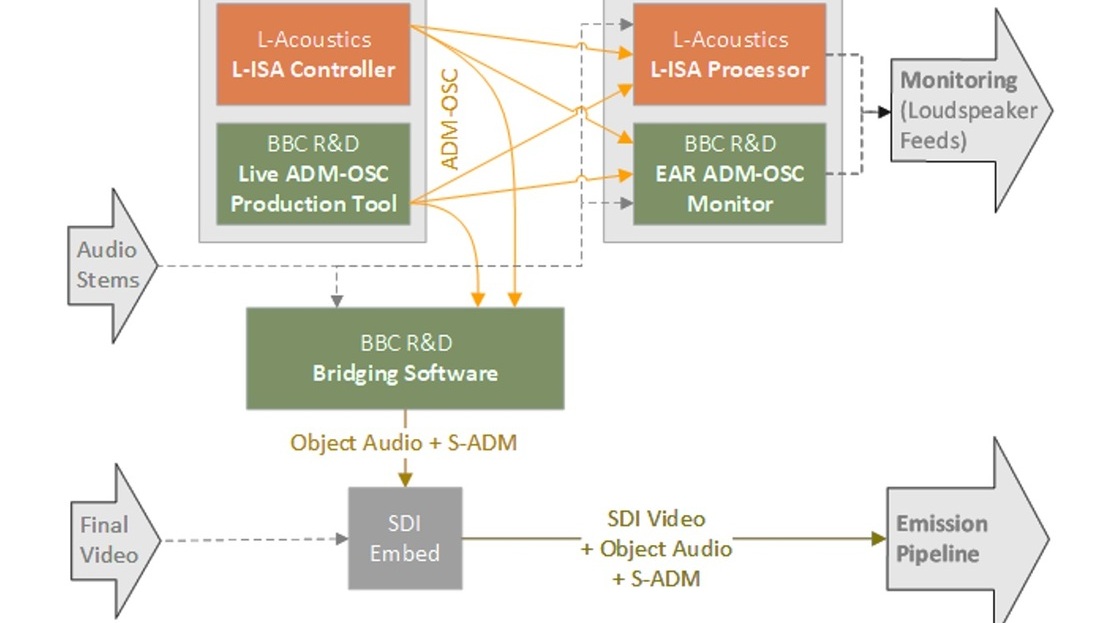

In this session from IBC2024, two authors present their work on Audio Description and implementing Audio Definition Model as part of the IBC Technical Papers.

Technical Papers 2024 Session: Advances in Video Coding – encoder optimisations and film grain

In this session from IBC2024, IMAX, MediaKind, Fraunhofer HHI and Ericsson present their work on video coding, as part of the IBC Technical Papers