This paper demonstrates that our LMM-based approach reduces the computational complexity of sampling-based per-title video encoding by a factor of 13 while maintaining the same level of bitrate savings. These findings pave the way for more efficient and adaptive video encoding strategies and highlight the potential of multi-modal models in multimedia processing tasks.

In video encoding, achieving the optimal balance between encoding efficiency and computational complexity remains a formidable challenge. This paper introduces a framework that uses a Large Multi-modal Model (LMM) to optimize per-title video encoding. Our framework harnesses the predictive capabilities of LMMs to estimate the encoding complexity of video content, so that encoding configurations can be selected dynamically according to each video's characteristics.
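How the predicted complexity is turned into encoding configurations is not detailed here. As a minimal sketch, assume the LMM outputs a scalar complexity score in [0, 1] and that configurations are chosen by scaling the bitrates of a reference ladder: harder content gets more bits per rung, easier content fewer. `Rung`, `REFERENCE_LADDER`, `select_ladder`, the ladder values and the linear mapping are all hypothetical illustrations, not the authors' method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rung:
    height: int        # output resolution height in pixels
    bitrate_kbps: int  # target bitrate for this rung


# Hypothetical reference ladder for content of "average" complexity.
REFERENCE_LADDER = [
    Rung(360, 800),
    Rung(720, 2400),
    Rung(1080, 4800),
]


def select_ladder(complexity: float,
                  ladder: list[Rung] = REFERENCE_LADDER,
                  min_scale: float = 0.5,
                  max_scale: float = 1.5) -> list[Rung]:
    """Scale every rung's bitrate by a factor derived from a complexity score.

    The score stands in for the output of a complexity predictor (an LMM in
    the paper); here it is simply an input. The linear score-to-scale mapping
    is an illustrative assumption.
    """
    if not 0.0 <= complexity <= 1.0:
        raise ValueError("complexity must be in [0, 1]")
    # 0.0 -> min_scale, 0.5 -> midpoint, 1.0 -> max_scale
    scale = min_scale + (max_scale - min_scale) * complexity
    return [Rung(r.height, round(r.bitrate_kbps * scale)) for r in ladder]
```

With the defaults above, a score of 0.5 leaves the reference ladder unchanged, while a score of 1.0 raises every rung's bitrate by 50%. A single scale factor keeps the example short; a predictor could equally output per-rung bitrates directly.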

The proposed framework departs significantly from traditional per-title encoding methods, which often rely on expensive, time-consuming sampling in the rate-distortion space. Through a comprehensive set of experiments, we demonstrate that our LMM-based approach reduces the computational complexity of sampling-based per-title video encoding by a factor of 13 while maintaining the same level of bitrate savings.
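Sampling-based per-title encoding commonly encodes each title at many (resolution, bitrate) points, measures the quality of each encode, and keeps the points on the upper convex hull of the rate-quality curve. The sketch below shows only that hull step, assuming the samples already exist and that quality is a VMAF-like score; `Sample` and `rd_convex_hull` are hypothetical names, and the source does not state that its baseline uses exactly this procedure. Each sample is a full encode plus a quality measurement, which is the cost that predicting complexity aims to cut.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    bitrate_kbps: float
    quality: float  # assumed VMAF-like score; higher is better
    height: int     # resolution the sample was encoded at


def _cross(o: Sample, a: Sample, b: Sample) -> float:
    # z-component of (a - o) x (b - o) in the (bitrate, quality) plane
    return ((a.bitrate_kbps - o.bitrate_kbps) * (b.quality - o.quality)
            - (a.quality - o.quality) * (b.bitrate_kbps - o.bitrate_kbps))


def rd_convex_hull(samples: list[Sample]) -> list[Sample]:
    """Efficient part of the upper convex hull of rate-quality samples."""
    if not samples:
        return []
    # Sort by bitrate; at equal bitrate put the higher-quality sample first.
    pts = sorted(samples, key=lambda s: (s.bitrate_kbps, -s.quality))
    hull: list[Sample] = []
    for p in pts:
        # Monotone-chain upper hull: drop the last point while it lies on or
        # below the chord from hull[-2] to p (i.e. no strict right turn).
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    # The upper hull rises then may fall; keep it only up to peak quality.
    best = max(range(len(hull)), key=lambda i: hull[i].quality)
    return hull[:best + 1]
```

In a per-title ladder built this way, each retained hull point gives a bitrate together with the resolution that achieves the best quality at that rate.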

The implications of this research...


IET announce Best of IBC Technical Papers

The IET have announced the publication of The best of IET and IBC 2024 from IBC2024, once again showcasing the groundbreaking research presented through the papers. The papers have been selected by IBC’s Technical Papers Committee for being novel, topical, analytical and well-written and which have the potential to make a significant impact upon the media industry. 327 papers were submitted this year, and after a rigorous selection process this publication features the ten papers deemed by the judges to be the best.

Technical Papers 2024 Session: 5G Case Studies – public network slicing trials and striving for low latency

In this session from IBC2024, Telestra Broadcast Service and the BBC present their work 5G Case Studies as part of the IBC Technical Papers.

Technical Papers 2024 Session: AI in Production – training and targeting

In this session from IBC2024, three authors from NHK, Viaccess-Orca and European Broadcasting Union present their work on the application of AI to media production as part of the IBC Technical Papers.

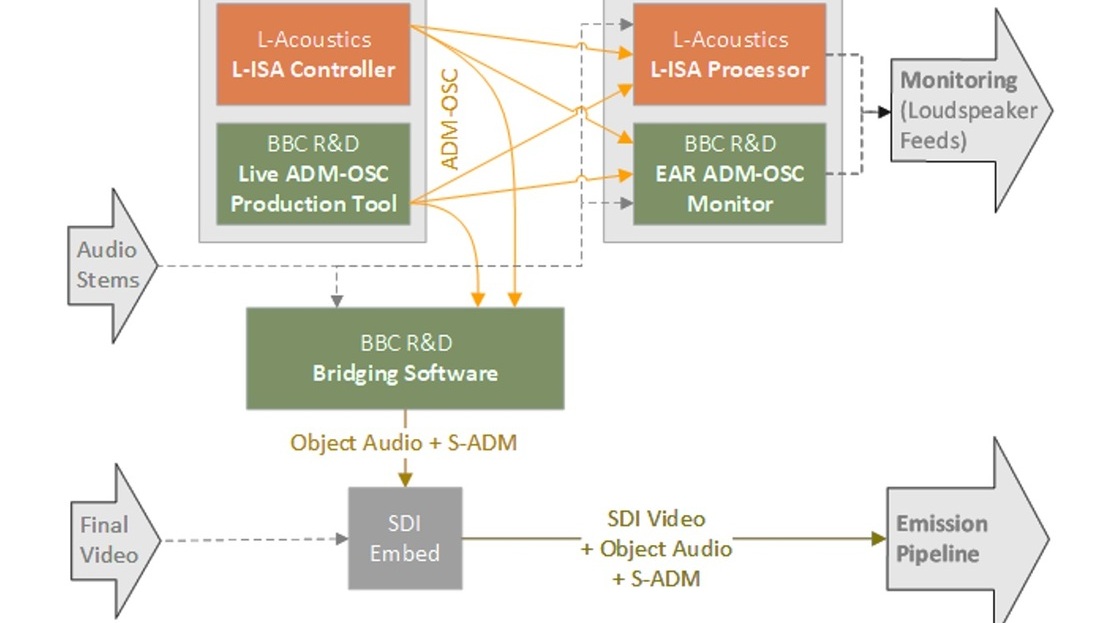

Technical Papers 2024: Audio & Speech – advances in production

In this session from IBC2024, two authors present their work on Audio Description and implementing Audio Definition Model as part of the IBC Technical Papers.

Technical Papers 2024 Session: Advances in Video Coding – encoder optimisations and film grain

In this session from IBC2024, IMAX, MediaKind, Fraunhofer HHI and Ericsson present their work on video coding, as part of the IBC Technical Papers