Connecting communities

Bringing together the global media, entertainment and technology community, IBC2024 welcomed 45,085 visitors from 170 countries to connect, showcase and discover innovations, tackle pressing industry challenges, and explore new opportunities.

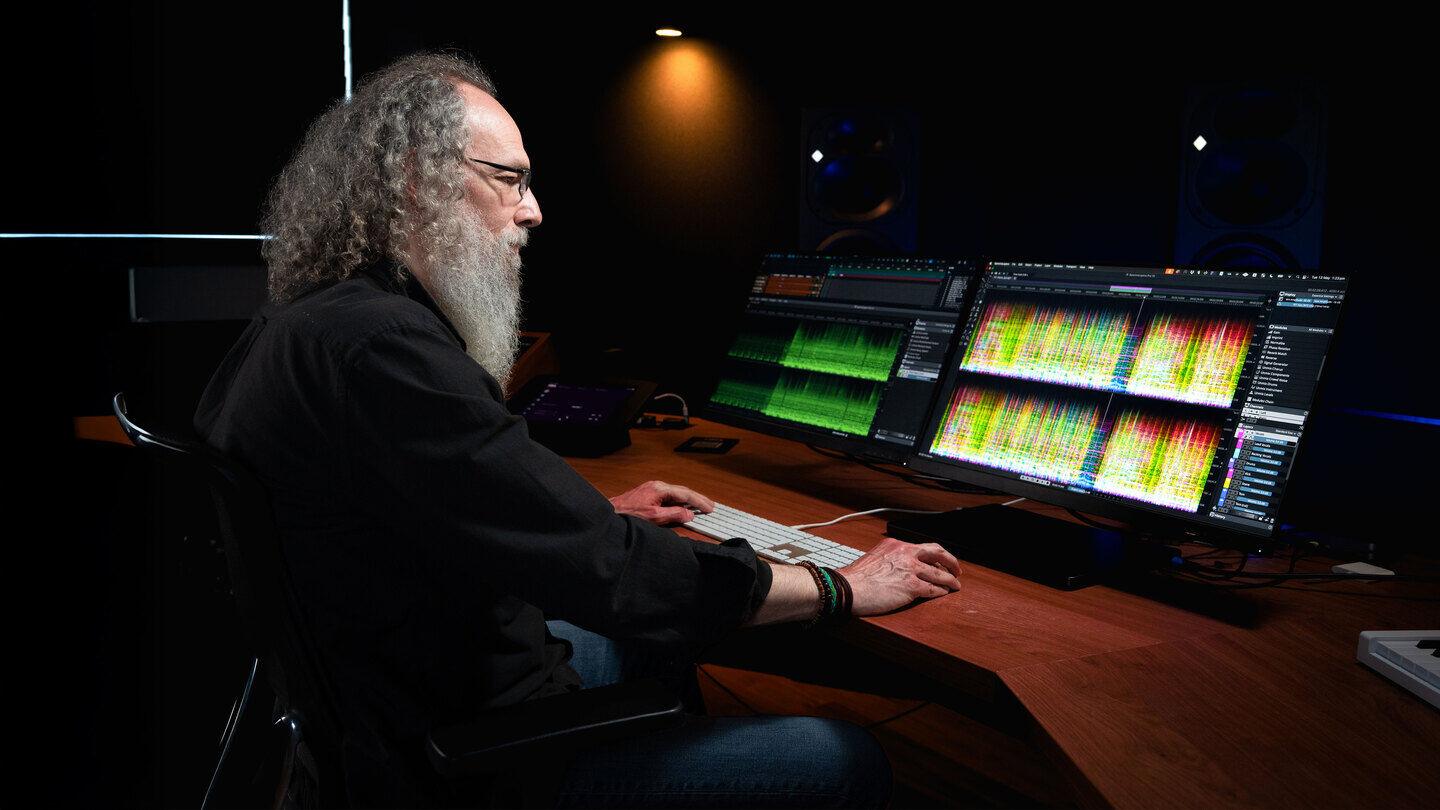

Across a bustling show floor and packed theatres, IBC2024 addressed critical trends and issues driving change across the media landscape while offering new show features such as the AI Tech Zone and the IBC Talent Programme.

Themes that took centre stage included AI, fighting disinformation in news, sustainability, 5G, cloud, esports, immersive experiences, over-the-top (OTT) and streaming, adtech, the metaverse, edge computing, and the need to foster talent across the industry, among many others.

IBC will be back at the RAI, Amsterdam, from 12 to 15 September 2025.
